"Elf-Disclosure" for June 2024

We've been in production for 11 months now, and June was characterized by "growing pains" with our Debrid-based streaming, a revenue-sharing initiative with developers, a range of new product options, and an unfortunate, storage-destroying outage.

This is the first month we've had a previous year's report to reflect back on. To see how far we've come, take a look at the June 2023 Elf-Disclosure report!

To get us started, here are some shiny stats for June 2024, followed by a summary of some of the user-facing changes announced this month in the blog...

Stats

| Money | Apr 2024 | May 2024 | June 2024 |
| --- | --- | --- | --- |
| Cluster | $1802 | $2925 | $2540 |
| Store | $56 | $56 | $76 |
| CI | $100 | $100 | $100 |
| Cloud | $20 | $20 | $20 |
| Development | 90h / $13,500 | 90h / $13,500 | 150h / $22,500 |
| OSS Sponsorship | $35 | $35 | $86.30 |
| Total Expenses | $15,513 | $16,636 | $25,580 |
| Income | $2,021 | $1,630 | $1,215 |
| Income % of cash expenses | 100.4% | 52% | 39.4% |
| Income % of all expenses | 13.02% | 9.8% | 4.75% |
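For anyone wanting to check the two "Income %" rows, here's a minimal sketch of how they appear to be derived, using the May 2024 column. It assumes "cash expenses" means every line item except Development time (an assumption on my part, though it reproduces the May figures); the variable names are purely illustrative.

```python
# May 2024 column from the table above (USD).
cash_expenses = {
    "Cluster": 2925,
    "Store": 56,
    "CI": 100,
    "Cloud": 20,
    "OSS Sponsorship": 35,
}
development = 13_500  # 90h of development time (assumed to be the only non-cash item)
income = 1_630

cash_total = sum(cash_expenses.values())
all_total = cash_total + development

pct_of_cash = round(100 * income / cash_total, 1)
pct_of_all = round(100 * income / all_total, 1)

print(f"Total expenses: ${all_total:,}")
print(f"Income % of cash expenses: {pct_of_cash}%")
print(f"Income % of all expenses: {pct_of_all}%")
```

The gap between the two percentages is almost entirely development time, which is why the second figure is so much smaller than the first.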

| Focus | Apr 2024 | May 2024 | Jun 2024 |

| Subscribers | 360 | 338 | 370 |

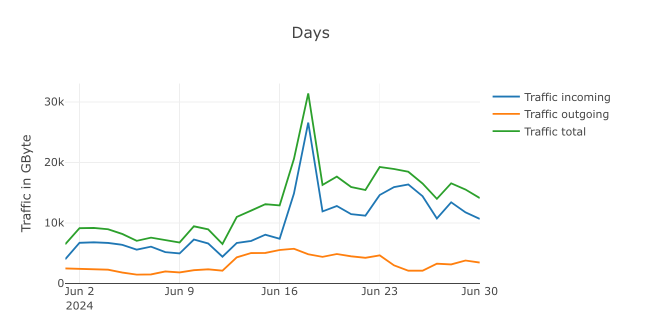

| Ingress | 256TB | 302TB | 296TB |

| Egress | 66TB | 59TB | 100TB |

| Pods | 3910 | 3188 | 4513 |

| Focus | Apr 2024 | May 2024 | Jun 2024 |
| --- | --- | --- | --- |
| Unique visitors | 18.7K | 18.9K | 21.7K |
| Total pageviews | 58.5K | 56.3K | 62.6K |
| Discord members | 826 | 921 | 1056 |
| YouTube subscribers | - | 72 | 131 |

| Focus | Apr 2024 | May 2024 | Jun 2024 |
| --- | --- | --- | --- |
| Total invested thus far | $139,683 | $156,319 | $181,899 |
| Total revenue | $6,841 | $8,471 | $9,686 |
| Income % of total invested | 4.88% | 5.42% | 5.32% |
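The cumulative rows are just running totals of the monthly figures. A small sketch, starting from the April values and adding the May and June "Total Expenses" and "Income" rows (the variable names are illustrative only):

```python
from itertools import accumulate

# Cumulative figures as at April 2024 (USD), from the table above.
invested_to_april = 139_683
revenue_to_april = 6_841

# Monthly "Total Expenses" and "Income" for May and June 2024.
monthly_expenses = [16_636, 25_580]
monthly_income = [1_630, 1_215]

# Running totals: [April, May, June]
invested = list(accumulate(monthly_expenses, initial=invested_to_april))
revenue = list(accumulate(monthly_income, initial=revenue_to_april))

# Income as a percentage of total invested, for May and June.
ratios = [round(100 * r / i, 2) for r, i in zip(revenue[1:], invested[1:])]

print(invested)
print(revenue)
print(ratios)
```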

Resources

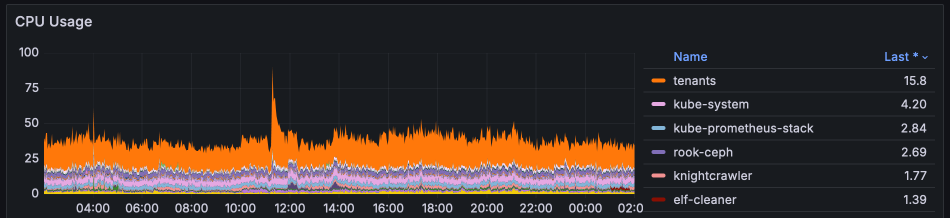

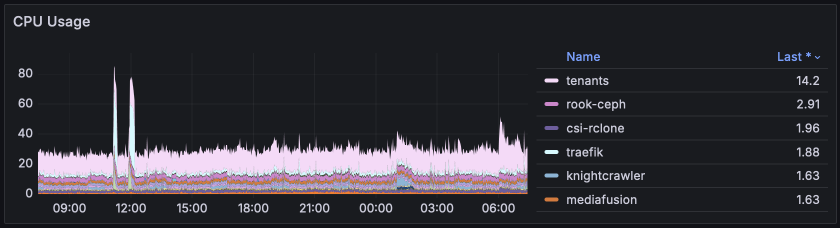

The stats below indicate CPU cores used (not percentage), and illustrate that tenant workloads remain the highest consumer of CPU, followed by an ever-decreasing collection of internal services. In previous months, the next-highest CPU consumer was usually Traefik, since it monitors changes to cluster resources to reload its config, and our resources change a lot. During June, I discovered the `--providers.kubernetescrd.throttleduration` command-line argument, which, now that it's dialed back to 60s, has made Traefik far less of a resource hog!
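To get a feel for why throttling helps, here's a rough model (not Traefik's actual implementation) which assumes at most one config reload per throttle window, however many change events land in that window. The function name and the synthetic event stream are made up for illustration.

```python
def count_reloads(event_times: list[float], throttle_s: float) -> int:
    """Count reloads when all events in the same throttle window share one reload."""
    if throttle_s <= 0:
        # No throttling: every change event triggers its own reload.
        return len(event_times)
    windows = {int(t // throttle_s) for t in event_times}
    return len(windows)


# One resource change per second for ten minutes (a synthetic, busy cluster).
events = [float(second) for second in range(600)]

unthrottled = count_reloads(events, 0)
throttled = count_reloads(events, 60)
print(unthrottled, throttled)  # 600 10
```

In this toy model, a 60s window turns 600 reloads into 10, at the cost of config changes taking up to a minute to apply.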

Various other optimizations applied during the month have reduced the non-tenant workloads, leaving more resource available for tenant apps!

The "last" values on the chart are specific to when the snapshot was taken, but compared to the previous month, there's not a lot of change in overall tenant CPU usage (which is good, since most of the resource pressure is on network and storage I/O).

Once again, observant readers will note changes in the month-to-month `kubectl top nodes` output - this snapshot was taken after moving our Plex pods to the 10Gbps giants, and providing "ranger" nodes to the "plus" power users.

    kubectl top nodes
    NAME       CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    dwarf01    233m         2%     23173Mi         72%
    dwarf02    233m         2%     23497Mi         73%
    dwarf03    202m         2%     23534Mi         73%
    dwarf04    232m         2%     26750Mi         84%
    dwarf05    188m         2%     23380Mi         73%
    dwarf06    169m         2%     24128Mi         75%
    dwarf07    217m         2%     24038Mi         75%
    dwarf08    210m         2%     23913Mi         75%
    dwarf09    187m         2%     23725Mi         74%
    dwarf10    146m         1%     23642Mi         74%
    elf01      4762m        29%    43858Mi         34%
    elf02      3800m        23%    38494Mi         29%
    elf03      2589m        16%    40445Mi         31%
    elf04      2086m        13%    43306Mi         33%
    elf05      1409m        8%     36800Mi         28%
    elf06      2255m        14%    39544Mi         30%
    elf07      3611m        22%    39399Mi         30%
    elf08      1990m        12%    40921Mi         31%
    elf09      4153m        25%    43534Mi         33%
    elf10      1938m        12%    38451Mi         29%
    elf11      2854m        17%    39092Mi         30%
    elf12      70m          0%     12371Mi         9%
    elf13      78m          0%     17228Mi         13%
    fairy01    1664m        10%    43357Mi         33%
    fairy02    994m         6%     44509Mi         34%
    fairy03    1412m        8%     87154Mi         67%
    giant01    1207m        10%    31006Mi         48%
    giant02    1756m        14%    32590Mi         50%
    giant03    3024m        25%    40936Mi         63%
    gnome01    428m         5%     13795Mi         21%
    gnome02    2269m        28%    41975Mi         65%
    gnome03    1377m        17%    34816Mi         54%
    goblin04   464m         3%     60072Mi         46%
    goblin05   387m         3%     49374Mi         38%
    goblin06   672m         5%     78009Mi         60%
    ranger01   250m         1%     15440Mi         11%
    ranger02   1096m        6%     16104Mi         12%
    ranger03   1280m        8%     18408Mi         14%
    ranger04   51m          0%     5666Mi          4%
    ranger05   76m          0%     5115Mi          3%

Last month's (May 2024) output, for comparison:

    kubectl top nodes
    NAME       CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    dwarf01    266m         3%     23369Mi         73%
    dwarf02    177m         2%     23381Mi         73%
    dwarf03    167m         2%     23191Mi         72%
    dwarf04    173m         2%     25477Mi         80%
    dwarf05    176m         2%     23728Mi         74%
    dwarf06    159m         1%     23654Mi         74%
    dwarf07    239m         2%     23515Mi         73%
    dwarf08    224m         2%     23253Mi         73%
    dwarf09    273m         3%     23955Mi         75%
    dwarf10    180m         2%     23216Mi         72%
    elf01      869m         5%     31220Mi         24%
    elf02      1113m        6%     32581Mi         25%
    elf03      1224m        7%     36480Mi         28%
    elf04      1365m        8%     45817Mi         35%
    elf05      717m         4%     37240Mi         28%
    elf06      1129m        7%     23484Mi         18%
    elf07      2480m        15%    42267Mi         32%
    elf08      1875m        11%    38316Mi         29%
    elf09      978m         6%     44215Mi         34%
    elf10      1212m        7%     34618Mi         26%
    elf11      1663m        10%    38939Mi         30%
    elf12      2959m        18%    36174Mi         28%
    elf13      1734m        10%    42492Mi         33%
    elf14      1489m        9%     34886Mi         27%
    elf15      1728m        10%    34203Mi         26%
    elf16      4718m        29%    51986Mi         40%
    fairy01    2906m        18%    74833Mi         58%
    fairy02    964m         6%     45500Mi         35%
    fairy03    576m         3%     32683Mi         25%
    giant01    1540m        12%    30243Mi         47%
    giant02    862m         7%     27471Mi         42%
    giant03    2462m        20%    31634Mi         49%
    gnome01    1098m        13%    26761Mi         41%
    gnome02    382m         4%     12228Mi         19%
    gnome03    554m         6%     10844Mi         16%
    goblin04   498m         4%     59342Mi         46%
    goblin05   377m         3%     49317Mi         38%
    goblin06   871m         7%     70292Mi         54%
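If you'd rather compare months by node family than eyeball individual rows, the output above is easy to aggregate. This is a minimal sketch which assumes CPU is always reported in millicores (`m`) and memory in `Mi`, as in the snapshots here; the function name is my own, and the sample is a handful of rows from the June output.

```python
import re
from collections import defaultdict


def summarise_top_nodes(output: str) -> dict[str, dict[str, int]]:
    """Sum CPU (millicores) and memory (Mi) per node family, e.g. 'elf' for elf01."""
    totals = defaultdict(lambda: {"nodes": 0, "cpu_m": 0, "mem_mi": 0})
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines, the command itself, and the header row.
        if len(fields) != 5 or fields[0] == "NAME":
            continue
        name, cpu, _cpu_pct, mem, _mem_pct = fields
        family = re.sub(r"\d+$", "", name)  # strip the trailing node number
        totals[family]["nodes"] += 1
        totals[family]["cpu_m"] += int(cpu.removesuffix("m"))
        totals[family]["mem_mi"] += int(mem.removesuffix("Mi"))
    return dict(totals)


sample = """\
kubectl top nodes
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
giant01 1207m 10% 31006Mi 48%
giant02 1756m 14% 32590Mi 50%
giant03 3024m 25% 40936Mi 63%
ranger04 51m 0% 5666Mi 4%
ranger05 76m 0% 5115Mi 3%
"""

summary = summarise_top_nodes(sample)
for family, stats in summary.items():
    print(family, stats)
```

Feeding it the full June and May outputs would show, for example, how much CPU shifted onto the giants after the Plex move.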

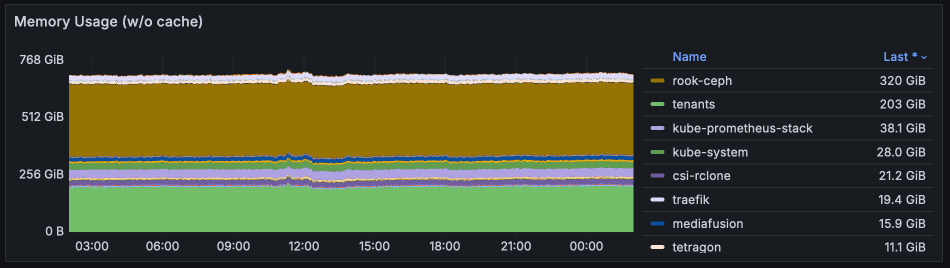

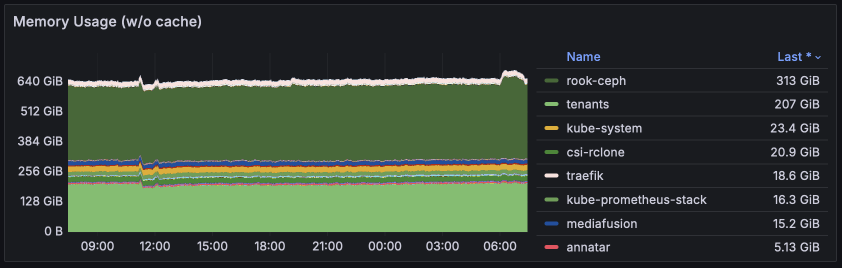

This graph represents memory usage across the entire cluster. By far the largest consumer of RAM is rook-ceph, since ceph will basically take all the RAM you give it, for caching / performance.

Why is the RAM usage for ceph so similar to last month, even though ElfStorage is gone? Because the 10 x HDD nodes which provided ElfStorage are still in service, serving some symlink volumes which haven't yet been migrated away. We should see these numbers change significantly in July.


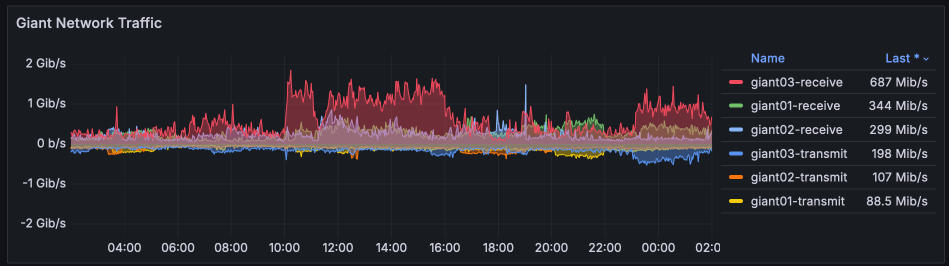

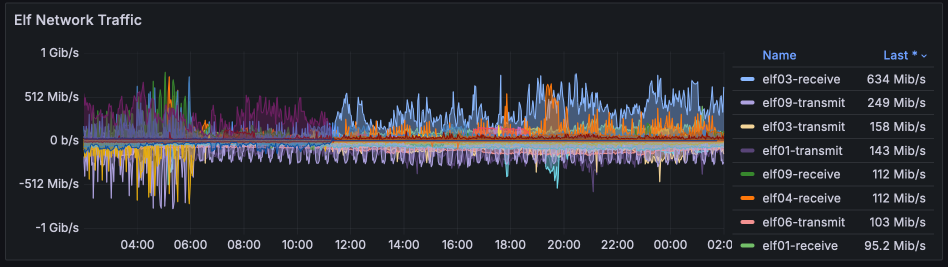

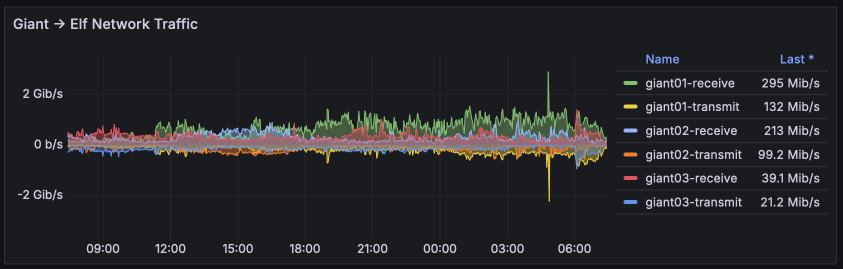

The function of the giant (10Gbps) nodes has changed recently, due to the shake-up from the RealDebrid rate-limiting saga (below). After a successful trial, most Plex instances are now running directly on the giants, allowing Plex "room to run" without hitting the 1Gbps throughput limit we experienced on the elves. This means far more efficient Zurg->Plex communication, and allows the 1Gbps elves to be used for more high-contention, CPU-heavy applications.

Having a full 10Gbps available for DirectPlaying from Plex also opened the door to "Boost" plans, which allow a user to (even for just 24h) "boost" their Plex egress bandwidth from the default 150Mbps all the way to 1Gbps if required.

Last month's (May 2024), for comparison:

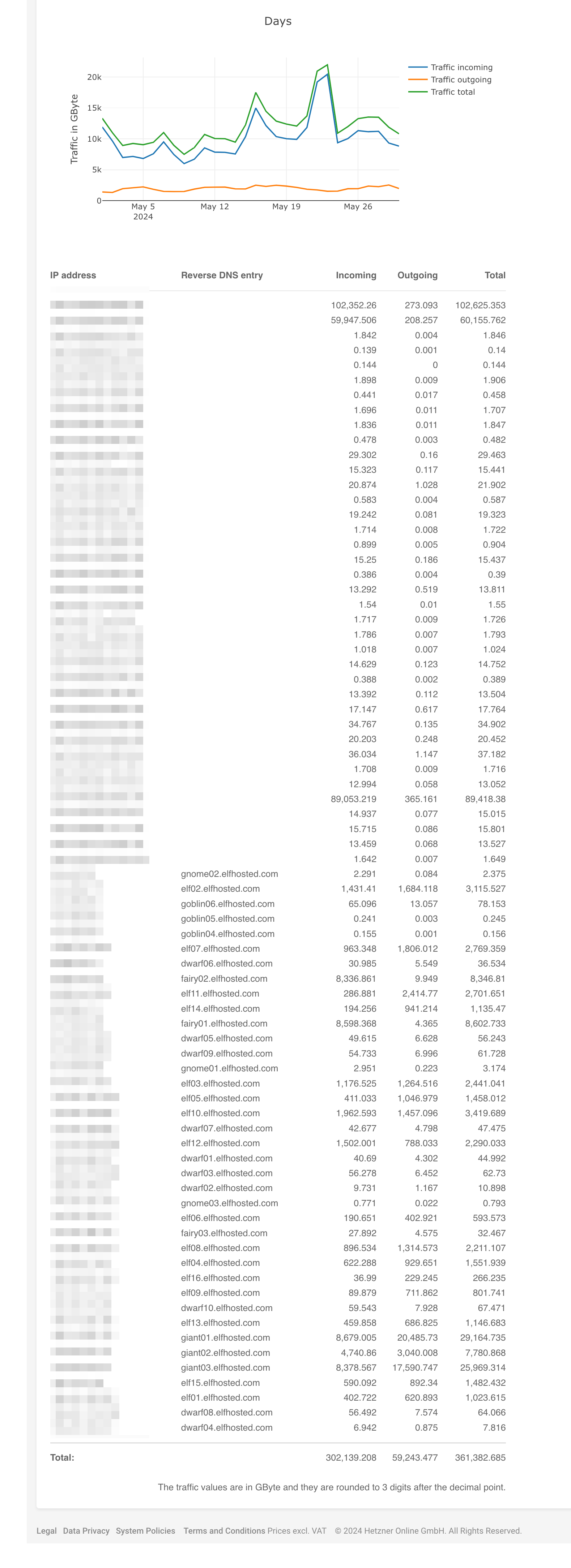

These are the traffic stats for egress from Hetzner. They exclude any traffic to/from Hetzner Storageboxes or ElfStorage, since this traffic is not classified as "external".

I've stopped recording the individual node details because, given the frequency of change during the month (thanks to Hetzner's hourly billing), those details add no useful value.

Ingress / Egress stats for Jun 2024

Last month's (May 2024), for comparison:

Ingress / Egress stats for May 2024

The ceph section has been removed from this and future reports, since ElfStorage is no longer a product we provide (details below).

Retrospective

Preparing for multiple datacenters

We've been talking for months about our dependency on Hetzner for our infrastructure, and the issues that our non-European Elves have with throughput from the Falkenstein datacenter. It's been impractical (and unaffordable) to establish an ElfHosted cluster in other regions when users were streaming media from their ElfStorage, but with the rise of the Infinite Streaming bundles with RealDebrid, some of those challenges go away.

I'm working on / testing an update to our cluster design which would allow users to run their services in a US-based ElfHosted cluster, without the dependency on storage which currently binds us to the spinning rust in the Hetzner datacenter.

In pursuit of this testing / redesign, I created the great #fluxpocalypse of June 2024...

Fluxpocalypse

What happened

An oversight when rearranging our GitOps infrastructure to support multiple clusters caused flux to remove-and-re-add a resource which points to another collection of resources, which in turn define apps, storage, and namespaces for each tenant.

Consequently, when I moved a `.yaml` file from one folder to another while the `prune: true` value was set, flux was instructed to remove and then promptly recreate all tenant resources, including the namespaces which housed ElfStorage volumes (which are usually set to be retained at all costs). Removal took about an hour, and once triggered, could not be stopped.
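To illustrate why a simple file move can be so destructive, here's a toy model of prune-style garbage collection. This is a simplified sketch and not Flux's actual implementation: it assumes a reconciler deletes anything it previously applied that no longer appears in what it renders now. The function and resource names are hypothetical.

```python
def plan_reconcile(previous_inventory: set[str], rendered: set[str], prune: bool) -> dict[str, set[str]]:
    """Return what a pruning reconciler would apply and delete on this run."""
    # With pruning on, anything applied earlier but missing now is garbage-collected.
    to_delete = (previous_inventory - rendered) if prune else set()
    return {"apply": set(rendered), "delete": to_delete}


tenants = {"Namespace/tenant-a", "Namespace/tenant-b"}

# The old folder no longer contains the file, so it renders nothing...
old_location = plan_reconcile(previous_inventory=tenants, rendered=set(), prune=True)
# ...while the new folder now renders the same resources from scratch.
new_location = plan_reconcile(previous_inventory=set(), rendered=tenants, prune=True)

print("old location deletes:", sorted(old_location["delete"]))
print("new location applies:", sorted(new_location["apply"]))
```

Deleting a namespace takes everything inside it along, which is how a delete-then-recreate of identical manifests still ends in data loss.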

We had no backups of ElfStorage data (>114TB), and snapshots of the app configs were stored in the namespaces themselves. There were no offsite backups (a design flaw I'm working through with the storage refactor ATM).

The fallout

Users were alerted quickly to the impending loss of data, and the ElfVengers stepped up to help triage the support requests.

A #fluxpocalypse Discord thread was established for Q&A, and refunds were unconditionally given to compensate for the loss of ElfStorage services / data. In total $880 of refunds were processed over a period of 2 days.

I opted not to resume offering ElfStorage at all, and deprecate it as a product, refunding any prepaid subscriptions. (ElfStorage no longer fitted our "Infinite Streaming" niche, and manually rebuilding users' lost libraries at 1Gbps all simultaneously would have been technically impractical).

After the deletion and excision of ElfStorage was completed, tenant apps were restarted with fresh (blank) configs. Tenants were incredibly understanding and supportive, expressing their commitment to remain ElfHosted.

The ElfVengers in particular saved the day in this case, working for hours after I'd clocked out, to provide information to users and to process refunds. Thanks team!

Life after ElfStorage

Although we no longer provide storage for user data (other than application config and symlinks), users are able to attach their own cloud storage (BYO Storage) to their apps. The most effective and affordable solution is a Hetzner Storagebox, since these are housed in the same datacenter as our infrastructure for low-latency connection, and can easily be "bolted onto" users' apps.

Not having the "baggage" of ElfStorage also helps with the multi-cluster design - it's simpler now to build a solution wherein a user could just opt into a different cluster, and we could optionally migrate their app configs (a couple of GBs) and symlinks.

We've not completely transitioned all of our storage off the HDD-based nodes though - this will take another week or so, after which these can be removed, realizing a cost saving of ~$500/month (offset, of course, against the loss of the portion of subscription revenue which covered these costs).

RealDebrid blocks, rate-limits

Half-way through June, our Hetzner IP ranges were once again blocked by RealDebrid - this time, even our IPv6 ranges couldn't download, and users were unable to stream their RealDebrid libraries through Plex, etc.

We scrambled to build a complex solution using BYO VPN and gluetun, which worked, but the barrier to entry for most users would have been too high. About 30 min after celebrating our geeky victory, @mxrcy pointed to a simpler solution using Cloudflare WARP, and Zurg's SOCKS5 proxy support.

For a while, we were offering 2 types of VPN for Zurg - either WARP or a BYO VPN via gluetun.

A few days later, the landscape changed again - our IP ranges were unblocked (IPv4 and IPv6), but RealDebrid began applying "fair use" limits to all accounts. Once again, users found themselves soft-banned and unable to stream their content, and much hand-wringing and consternation ensued!

Within a few days, @yowmamasita had identified the causes of high bandwidth usage within Zurg (repair loops, and aggressive rclone VFS caching), and made several improvements. Because we are sponsors, ElfHosted users benefit from Zurg improvements immediately, which made the RD situation "manageable" if you're not doing big library-building operations.

Finally, by the end of the month, @yowmamasita had baked into Zurg support for multiple RD tokens, such that you can use your primary token to manage your library and download, but if that token gets soft-banned for hitting a rate-limit, Zurg will move onto the next token just for downloads, such that your library stays managed by your main account.

Several of our Elves have tested this design and found it to work exactly as advertised, freeing them from "RD Jail". An elegant and fair solution!
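For the curious, the multi-token behaviour described above can be sketched roughly as follows. To be clear, this is not Zurg's code: the class, method names, and token strings are hypothetical, and it only models the idea that the first token always manages the library while downloads fall through to the next token that isn't rate-limited.

```python
from dataclasses import dataclass, field


@dataclass
class TokenPool:
    """Hypothetical model of primary-plus-fallback RD tokens."""

    tokens: list[str]
    rate_limited: set[str] = field(default_factory=set)

    @property
    def library_token(self) -> str:
        # Library management always stays with the primary (first) token.
        return self.tokens[0]

    def download_token(self) -> str:
        # Downloads use the first token which hasn't been soft-banned.
        for token in self.tokens:
            if token not in self.rate_limited:
                return token
        raise RuntimeError("all tokens are currently rate-limited")

    def mark_rate_limited(self, token: str) -> None:
        self.rate_limited.add(token)


pool = TokenPool(tokens=["primary-token", "spare-token"])
first_choice = pool.download_token()

pool.mark_rate_limited("primary-token")  # primary hits the "fair use" limit
second_choice = pool.download_token()

print(first_choice, second_choice, pool.library_token)
```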

Bandwidth Boosts available

Several users requested an option to pay more to increase their Plex egress limits (either to share with a larger collection of friends & family, or simply as "peace-of-mind" that their streaming setup will be reliable). We initially created 300Mbps packages on the Elves, but now that we've migrated streaming to the Giant nodes, we can "boost" Plex / Jellyfin / Emby all the way up to 1Gbps!

See the "Booster Packs" category in the store for details!

Plus plans introduced

An off-the-cuff request from a tenant for hardware transcoding support led to the creation of a new family of products, the "Infinite Streaming Plus" plans.

Normally, users' apps have to contend with other users' apps for CPU and network bandwidth, and all 4K / HEVC / CPU transcoding is prohibited. While we resource the cluster such that apps perform to reasonable expectations, users may prefer more CPU / RAM / bandwidth, and hardware transcoding for their apps.

A Plus plan gets a user one of only 4 "slots" on a dedicated, uncontended "ranger" node (same i9-9900K, 1Gbps hardware as the elves), on which hardware transcoding is fully allowed, and limits are very few - currently, the only hard limit is 50% of the entire node's resources.

This means a user could run their Infinite Streaming stack, consuming up to 500Mbps of bandwidth when available (50% of which is contended), and take full advantage of the QSV support for hardware transcoding.

To prevent misunderstandings / abuse, Plus packages require you to already have a regular Infinite Streaming bundle, which you'll "trade in" for the Plus package. An ElfVenger can help transition you to Plus, and all your app configs will remain unaffected.

Plus packages are $49/month, which is close to what a regular Infinite Streaming bundle with a 300Mbps booster pack would cost!

Riven rises

Riven has been the darling of our community in the past month, receiving rapid development updates (sometimes too rapid!), with an active collection of users providing feedback in the #elf-riven channel.

Part of the attraction of Riven is that we have an app developer in Discord, actively engaging users and responding to feedback / requests. One of the "attractions" that ElfHosted offers to developers is that we have a community / platform "ready-to-go", and we can provide more support than trying to build a fresh community from scratch.

Having close proximity to our developers also makes our users aware of how much work / effort they put in, and we support developers (the "revenue sharing" model) by contributing 30% of their apps' subscription revenue back to them.

Our store reporting is not advanced enough (yet) to calculate parts of a month, or trials-vs-subscriptions, so for June 2024, our contribution to Riven development is simply (Riven pods x daily subscription price x 30% x active days), which totals a back-of-the-envelope ElfHosted contribution for Riven of 38 (pods) x $0.30/day x 30% x 15 days = **$51.30**.
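The same back-of-the-envelope calculation, as a small sketch. It assumes the daily price is in dollars, and uses `Decimal` to avoid floating-point noise in currency amounts; the function name is illustrative.

```python
from decimal import Decimal


def revenue_share(pods: int, daily_price: Decimal, share: Decimal, active_days: int) -> Decimal:
    """pods x daily subscription price x developer share x active days."""
    return pods * daily_price * share * active_days


riven_june = revenue_share(
    pods=38,
    daily_price=Decimal("0.30"),  # per pod, per day
    share=Decimal("0.30"),        # the 30% contributed back to developers
    active_days=15,
)

print(f"${riven_june:.2f}")  # $51.30
```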

If you'd like to support Riven in addition to the 30% of your subscription, join me in sponsoring here

Releases Revealed

Now that we've bedded in the new release process, we're getting daily release notes posted to #elf-announce, as the maintenance window starts. These are a little too verbose currently for my liking, but they do provide critical feedback re app changes, and have already been well-received. Look out for more polish to come!

Spanky does Support

We reported last month that general support issues were moving to Discord topics in #elf-support, to be better "bottified".

Our newest ElfVenger, @Mxrcy, has implemented Elf-specific "cogs" for the "Red" Discord bot (ours is named "Spanky") to support our public ticket system. It's getting easier now to pull specific support queries out of a general chat channel, and turn these into a support topic, along with options for private discussion, transcription, etc. Of particular note, having support topics be "public" has increased opportunities for users to help each other, while reducing support load on the ElfVengers.

The ticket system passed its "trial-by-fire" during the #fluxpocalypse, where it served to quickly manage all the refunds / individual support issues while keeping primary channels free and available for communication.

Elf-errals deprecated

Last month's report described a refactor of our referral system, but this month we're reporting that referrals are deprecated, pending some re-work. Careful review revealed that the WordPress plugin we were using was vulnerable to manipulation given our complex array of low-cost products, and our daily billing cycle.

I'm interested in feedback re how referrals should work - it may be possible, for example, to only do referrals on Infinite Streaming bundles, rather than on all products. (I.e., previously it was possible for a referrer to earn $5 on a $0.10/day order!)

Discord branding updated

You might have noticed the Discord server changed its name from "Funky Penguin" to "ElfHosted / Funky Penguin". This was done to better align with the ElfHosted branding, since the number of Elves in Discord is now greater than the number of geeks, and I wanted to reduce support overhead / confusion on behalf of new users.

What's coming up?

No more grandfathered pricing

It's painfully obvious that we're not profitable yet - we're still heading in the right direction (all the refunds this month didn't help!), but the initial pricing on our "legacy" subscriptions (pre Apr 2024) was mostly guesswork, and is not breaking even.

When we did a data-driven repricing in Apr, existing subscriptions were not repriced (both as a goodwill gesture and because it was technically hard to do).

As of 1 Aug, any remaining grandfathered subscriptions will be repriced on renewal to match current rates.

PlexTraktSync is a tool which synchronises a Plex library with a Trakt library. Why would you want such a thing? One option we're exploring is to transition a user from "Infinite Streaming" (plex_debrid-based) to "Advanced Infinite Streaming" (arr-based), which would require re-creating Plex libraries. The idea would be to use PlexTraktSync to sync a Trakt list with an existing Plex library, and then feed that Trakt list to Radarr/Sonarr, to bootstrap the arr-based library collection.

Storage re-refactoring

With the loss of ElfStorage, and the goal of multi-region ElfHosted clusters, the refactoring of storage is continuing (slowly and carefully). I hope to have tangible news to report on the multi-cluster migration options, in next month's report.

Offering free demos (AudioBookshelf)

AudioBookshelf demo coming soon

I've offered a free demo instance to the AudioBookshelf community, in line with the plan below. We should see this eventuate during June!

Nothing gets my attention on a new app like a live demo. I've considered reaching out to open source projects who don't have their own online demo, and offering to host a self-resetting demo for them.

This approach has been successful with the Stremio Addons, and I think it'd be effective at gaining exposure to more niche communities.

A hosted demo would provide their potential users the opportunity to evaluate the app "live", and would also drive more traffic / recognition / SEO juice towards ElfHosted.

If you've got an open-source, self-hosted app and you'd like a free demo instance hosted, hit me up!

(We are currently donating torrent hosting to https://freerainbowtables.com)

Your ideas?

Got ideas for improvements? I'd love to hear them, post them in #elf-suggestions!

How to help

If you'd like to make a donation in recognition of our infrastructure costs, our open-source resources, or our friendly support, a simple donation product is available at https://store.elfhosted.com/product/elf-love/

Another effective way to help is to drive traffic / organic discovery, by mentioning ElfHosted in appropriate forums such as Reddit's r/selfhosted, r/seedboxes, r/realdebrid, and r/StremioAddons, which is where much of our target audience is to be found!

Join us!


Want to get involved? Join us in Discord and come and test-in-production!

What users say..

Here's what some of our friends say..

I am new here, but today I realized that Elfhosted is one of the best free and open source software communities I've seen, and FOSS communities have been at the center of my life since the 90s (Perl, PHP, Symfony, Drupal, Ethereum, etc.). Great open software built by great people who care = great community, and that is something special.

You've done an amazing job @Funky Penguelf with the platform you provide and this place has an awesome mix of active community caretakers and software creators that I've seen here so far like BSM, Spoked, LayeZee and other elf vengers. Keep up the energy, productivity and community and take time to enjoy it and appreciate each other!

@skwah (Discord)

I self host and share a fully automated ‘arr stack with Plex. Been doing so for around 4 years. Also recently got into real debrid and hosting a Comet and Annatar for Stremio. The amount of time and head banging I’ve put into it is in the hundreds to thousands of hours. From setting it up to keeping it running smoothly. Let’s not forget the cost of my server and how much it cost to keep it running.

Anyway I wanted to see what ElfHosted was about to compare. Yeah I had the whole thing setup in just a few hours. It also passes the headache of maintaining it to ElfHosted. Will I keep it no because nerdy things and maintaining my server are my hobby and quirky passion project. Will I recommend it to my friends who don’t have the money up front to buy a server, the knowledge to maintain it or desire.

Just my server alone was $2k. Power cost to keep it on yearly is $250ish, annual memberships to RD, Usenet and indexers are around $100. Then whatever a value my free time at. Which is currently at minimum my hourly pay at work or more. Yeah so take the monthly cost of all that and compare to ElfHosted Ultimate Stream package at $39 monthly, add RD to the cost and get nearly all your time back is incredibly cheap.

Lastly it seems like a lot of people forget how quickly an ultimate cable package used to cost. Or how quick paying for every stream service would add up to. Which when using ElfHosted with RD is essentially and more what you get. Quick hint it’s far above the asking price.

/u/MMag05. (Reddit)

As a happy Elfhosted customer—who also self hosts MANY things across about 10 severs (dedicated, VPSes, and VMs running on Synology), I wouldn’t switch to self hosting the services I get from Elfhosted. They just work with very little effort configuring things, and the support the owner and his team provides is second to none. Plus I love being part of a fledgling—but quickly growing—enterprise.

/u/jatguy. (Reddit)

I recently found ElfHosted and decided to start out with the Infinite Starter Kit. Within a week I realized that this was for me and upgraded to the Hobbit plan. Give it another week and I was up to the Ranger plan.

I just love the simplicity and the fact that things just work. For years I've ran a home server and between the constant maintenance and always upgrading harddrives, it became apparent I wanted to make it easier on my self. Enter ElfHosted.

Setup was super easy with the guided documentation and the discord community. It seems that somebody is available at all hours of the day to help with questions. I started with the Aars, which I knew from my prior hosting... but saw a newer product called Riven. I decided to jump in feet first. I enjoy being on the front end of an up and coming replacement for the Aars and will soon be upgrading to the annual plan!

@.theycallmebob. (Discord)

I’ve been using this service for a while now, and honestly, it’s a game-changer compared to anything else I’ve tried for managing my media library. The support is fantastic—super quick, and if the staff aren’t around (which rarely happens), the community steps up right away. I can’t imagine going back to any other platform.

Before this, I had my own setup with a NUC, NAS, and tools like Sonarr and Radarr. It worked pretty well for a while, and my internet speed was high enough to stream without any buffering. But in the end, it wasn’t worth the time or headache of managing all the storage and keeping everything running smoothly.

Now, with this service, everything runs smoothly in 1080p+ with no buffering issues. The interface is really easy to use, which makes managing everything a breeze. Plus, having a whole community of smart people available for guidance is a huge bonus.

I was sold from the start, which is why I quickly upgraded from a 1-month to a 3-month subscription, and I’m planning to switch to a 1-year plan soon. This service totally pays for itself, and I’m sure you won’t be disappointed. It’s been really impressive.

@seapound (Discord)

Best possible options for anyone looking for the do-it-all option along with the best customer service ive experienced in this space so far. Id rate it a 6 if I could but its limited to 5/5...

@hashmelters (Discord)

(responding to a Reddit thread re the cost of ElfHosted vs mainstream streaming / self-hosting):

I didn't know that the goal of this project was to compete with large companies running/renting entire DCs. I was under the impression that the goal of this project was to manage the updating of almost selfhosted applications on a shared platform with other users. Basically, be my sysadmin for me.

That being said, paying for services is the 'easy button'. There is a real world cost incurred for the time saved. Time is money. Time is the most valuable currency that exists. Once time is spent, it's forever lost, one cannot retrieve it again (yet). In my mind, there are 3 options for use of time with respect to: mainstream, selfhosting, elfhosted.

- mainstream - my time is valuable and I don't want curated content and I don't care what content that I have the ability to consume. I only like what's popular.
- elfhosted - my time is valuable, I want my own curated content without being forced to browse past the same damn entry 500 times just to find out that I can't watch the movie I want because it's not available in my current location or was removed last week from mainstream providers.
- selfhost - I care about costs and I have nothing but time to waste or I want to learn about the backend of the systems involved. I'll pay for my own VPS/homelab, electricity, manage the OS, manage app updates, figure out how to make the apps talk nice to each other, create my own beautiful frontend.

I know how much my time is worth, does that reddit poster know how much their time is worth? Without knowing what you are worth, you can't make effective capital expenditures with respect to the time it will take to recoup the capital.

I know I don't need elfhosted at all for my use case. I choose to stay with elfhosted because it's my 'easy button'. It's an efficient capital expense for the amount of time it saves me managing my own hardware, apps and saves me electricity costs. I'm also in a situation where I don't have upload bandwidth from my home to serve HD content to myself remotely. If I lived back in a city, I would still be here. My time is worth $$/hr.

@cobra2 (Discord)

"Just wanted to check in here and let @Darth-Penguini and anyone/everyone else know...WOW. I have been struggling with storage for years, maintenance of Docker containers, upkeep, all of it. Elfhosted is so freeing. It's an amazing service that I hope to be a member of for a long, long time!"

@Fingers91 (Discord)

"I just have to say, I am an incredibly satisfied customer. I had been collecting my own content for nearly 20 years. Starting off with just a simple external HD before eventually graduating to a seedbox with 100TB of cloud storage attached and fully automated processes with Sonarr and Radarr . However, the time came when the glory days of unlimited Google Drive storage ended. I thought my days of having my full collection at my fingertips via :plex: were behind me, until I found Real-Debrid and ElfHosted.

Now I essentially have the exact same access to content as I had before, but even better. Superior support and community involvement. Content is available almost immediately after being identified. A plethora of tools at my fingertips that give me more control and automation than ever before. Wonderfully well done and impressive! I am looking forward to being a customer for a very long time! Massive kudos to @funkypenguin 🤟

@BSM (Discord)

"I would recommend ElfHosted to anyone. It has been great so far and made life a lot easier than running my own setups. If you’re in the fence give them a try and help support this great community."

Zestyclose_Teacher20 (Reddit)

"thanks for the help and must say this is the best host I every had for my server 🙂 10/10 🙂 All other places I have try have I got a lot buff etc. Your host can even give me full power on a 4K Remux on 200GB big movie file . That's damn awesome 😄"

@tjelite (Discord)

"What an amazing support system these guys have Chris and Layzee i think it was! Both are very patient with me even though I am a newbie at all this. Very thorough and explained everything step by step with me

I couldn’t ask for anything better than the service I have received by these guys! Happy happy client❤️"

@dead.potahto (Discord)

"Very happy customer. Great service"

@ronney67 (Discord)

"Very good customer service, frequent updates, and excelent uptime!!!!!"

@ed.guim (Discord)

"I had my own plex-arrs setup on hetzner for years. Yesterday I deleted everything as elfhosted has gone above and beyond it. And it has a fantastic, active community as well! Very friendly, helpful and like-minded folks always willing to help and improve the system. Top notch!"

@alon.hearter (Discord)

"Absolutely Amazed with the patience and professionalism of all Elf-Venger Staff including bossman penguin❤️"

@dead.potahto (Discord)

"@BSM went above and beyond to make sure I had all the one on one support needed with my sub. Thank you for your patience! Elfhosted continues to be Elftastic !!"

@bfmc1 (Discord)

"really enjoying the service from elfhosted. The setup is really easy from the guides on the website. And the help on the discord channel is really quick."

@jrhd13 (Discord)

"Support is amazing, and once you find a setup which works best for you it works perfectly, very happy 😊"

@fiendclub (Discord)

"great fast service, resolved my problem and really friendly"

@allan.st.minimum (Discord)

"Great service and sorted out a billing issue super quick and easy."

@scottcall707 (Discord)

"Very friendly support, resolved a problem with my account! I also appreciate the community that has been built around the service!"

@leo1305 (Discord)

"excellent customer service and very fast replies"

@yo.hohoho (Discord)

"Loved the simplicity, experience and support"

@y.adhish (Discord)

"Very friendly help as always, problem solved, one happy elf here!"

@badfurday0 (Discord)

"Great Helpful and Fast support. Thanks!"

@.mxrcy (Discord)