"Elf-Disclosure" for Apr 2024

We're 9 months old now, and have passed the one-month mark since the "new" (April 2024) pricing, which normalized resource-heavy "infinite debrid" apps based on measured usage data.

We moved a little more slowly and broke fewer things, testing and ultimately rolling out an update to RDTClient to bring our auto-updating image up-to-date with the upstream code.

(We then totally "cowboyed" an IPv6 upgrade to deal with a surprise policy change by RealDebrid towards non-residential IPv4 addresses!)

Additionally, for the first time ever, we broke even[4] on cash expenses for the month!

To get us started, here are some shiny stats for Apr 2024, followed by a summary of some of the user-facing changes announced this month in the blog...

Stats

| Money | Feb 2024 | Mar 2024 | Apr 2024 |
|---|---|---|---|
| Cluster [4] | $2540 | $2540 | $1802 |
| Store | $56 | $56 | $56 |
| CI | $100 | $100 | $100 |
| Cloud | $20 | $20 | $20 |
| Development [3] | 120h / $18,000 | 90h / $13,500 | 90h / $13,500 |
| Sponsorships [5] | $35 | $35 | $35 |
| Total Expenses | $20,870 | $16,251 | $15,513 |
| Income | $1,225 | $1,525 | $2,021 |
| Income % of cash expenses | 42.6% | 53.14% | 100.4% [4] |
| Income % of all expenses | 5.87% | 9.38% | 13.02% |
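To make the "broke even on cash expenses" claim easy to check, here's a minimal sketch that recomputes the April figure from the table above. It assumes that "cash expenses" means every expense row except Development (which footnote 3 describes as opportunity cost), and that the $35 row is the sponsorships mentioned in footnote 5.

```python
# Apr 2024 figures copied from the Money table (US dollars).
cash_expenses = {
    "cluster": 1802,
    "store": 56,
    "ci": 100,
    "cloud": 20,
    "sponsorships": 35,  # the $35 row; footnote 5 describes it as sponsorships
}
income = 2021

# Assumption: Development is opportunity cost, so it is excluded here.
cash_total = sum(cash_expenses.values())
pct_of_cash = round(100 * income / cash_total, 1)

print(f"cash expenses: ${cash_total}, income covers {pct_of_cash}%")
```

With these inputs the cash expenses come to $2,013, and income covers 100.4% of them, which matches the table.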

| Focus | Feb 2024 | Mar 2024 | Apr 2024 |
|---|---|---|---|
| Subscribers | 325 | 413 | 360 |
| Ingress | 52TB | 150TB | 256TB |
| Egress | 21TB | 58TB | 66TB |
| Pods | 4097 | 3446 [6] | 3910 |

| Focus | Feb 2024 | Mar 2024 | Apr 2024 |
|---|---|---|---|
| Unique visitors [2] | 13K | 15.7K | 18.7K |
| Total pageviews [2] | 50K | 54.9K | 58.5K |
| Discord members | 525 | 702 | 826 |

| Focus | Feb 2024 | Mar 2024 | Apr 2024 |
|---|---|---|---|
| Total invested thus far [1] | $107,919 | $124,170 | $139,683 |
| Total revenue | $3,295 | $4,820 | $6,841 |
| Income % of total invested | 3.01% | 3.88% | 4.88% |

Resources

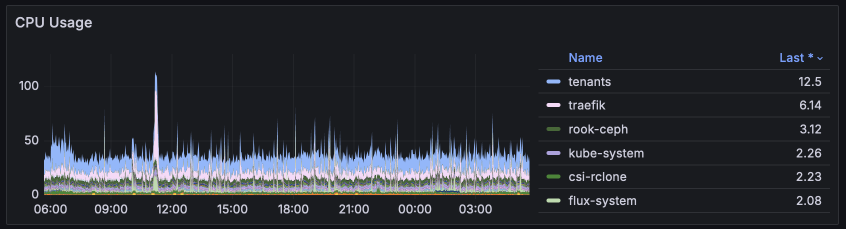

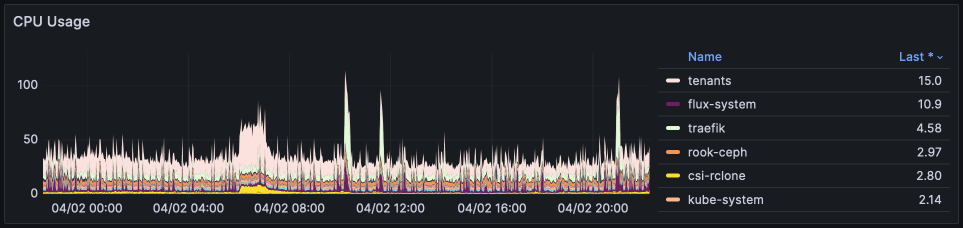

The stats below indicate CPU cores used (not percentage). They illustrate that, after tenant workloads, our highest CPU consumer is Traefik, which spikes significantly during the daily maintenance window, when there's a lot of pod "churn". We run Traefik as a daemonset, meaning we have > 22 pods, each of which gets "excited" during pod churn and clocks in some heavy CPU usage, but this is still unexpected behaviour. Work will be undertaken in April 2024 to upgrade Traefik to v3 (it's a major update and needs careful testing), after which we'll revisit the CPU usage.

Until recently, Flux's CPU usage has also been high, with 26 shards (a-z) to spread out the load of reconciliations, but a recent refactor has significantly reduced this.
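For anyone curious what a-z sharding could look like, here's a minimal sketch. It assumes that each tenant is assigned to a shard by the first letter of its name, which is our illustration only (the text above doesn't describe the real mapping), and `shard_for` is a hypothetical helper, not part of Flux.

```python
import string

SHARDS = string.ascii_lowercase  # 26 shard keys, "a" to "z"


def shard_for(tenant: str) -> str:
    """Return the shard key for a tenant name (hypothetical scheme)."""
    first = tenant.strip().lower()[:1]
    # Names that don't start with a-z fall back to a fixed shard.
    return first if first and first in SHARDS else "a"


for name in ("Zelda", "frodo", "9lives"):
    print(name, "->", shard_for(name))
```

The point of a scheme like this is that each shard only reconciles its own slice of tenants, so no single controller has to walk the whole cluster.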

The "last" values on the chart are specific to when the snapshot was taken, but compared to the previous month, there's not a lot of change in overall tenant CPU usage (which is good, most of the resource pressure is on network and storage I/O).

kubectl top nodes

| NAME | CPU(cores) | CPU% | MEMORY(bytes) | MEMORY% |
|---|---|---|---|---|
| dwarf01 | 456m | 5% | 25932Mi | 81% |
| dwarf02 | 316m | 3% | 24947Mi | 78% |
| dwarf03 | 327m | 4% | 26507Mi | 83% |
| dwarf04 | 353m | 4% | 26359Mi | 82% |
| dwarf05 | 279m | 3% | 25536Mi | 80% |
| dwarf06 | 323m | 4% | 26850Mi | 84% |
| dwarf07 | 376m | 4% | 26702Mi | 83% |
| dwarf08 | 290m | 3% | 25867Mi | 81% |
| dwarf09 | 372m | 4% | 26528Mi | 83% |
| dwarf10 | 328m | 4% | 26228Mi | 82% |
| elf01 | 1533m | 9% | 28765Mi | 22% |
| elf02 | 1958m | 12% | 33612Mi | 26% |
| elf03 | 5858m | 36% | 26152Mi | 20% |
| elf04 | 4438m | 27% | 59242Mi | 46% |
| elf05 | 962m | 6% | 40678Mi | 31% |
| elf06 | 996m | 6% | 30989Mi | 24% |
| elf07 | 1856m | 11% | 36243Mi | 28% |
| elf08 | 563m | 3% | 22437Mi | 17% |
| elf09 | 1099m | 6% | 35952Mi | 27% |
| elf10 | 1082m | 6% | 27806Mi | 21% |
| elf11 | 2020m | 12% | 42999Mi | 33% |
| elf12 | 1571m | 9% | 44910Mi | 34% |
| elf13 | 1534m | 9% | 56472Mi | 43% |
| elf14 | 2364m | 14% | 39203Mi | 30% |
| elf15 | 3284m | 20% | 54181Mi | 42% |
| elf16 | 915m | 5% | 35673Mi | 27% |
| elf17 | 951m | 5% | 29772Mi | 23% |
| elf18 | 2454m | 15% | 25660Mi | 19% |
| elf19 | 775m | 4% | 45392Mi | 35% |
| elf20 | 3122m | 19% | 31907Mi | 24% |
| elf21 | 2299m | 14% | 41081Mi | 31% |
| elf22 | 969m | 6% | 34268Mi | 26% |
| elf23 | 1081m | 6% | 43334Mi | 33% |
| elf24 | 1611m | 10% | 25941Mi | 20% |
| fairy01 | 1618m | 10% | 81035Mi | 62% |
| fairy02 | 902m | 5% | 49751Mi | 38% |
| fairy03 | 969m | 6% | 35624Mi | 27% |
| giant01 | 1249m | 10% | 25236Mi | 39% |
| giant02 | 1096m | 9% | 25917Mi | 40% |
| giant03 | 2496m | 20% | 25542Mi | 39% |
| gnome01 | 373m | 4% | 13667Mi | 21% |
| gnome02 | 623m | 7% | 27002Mi | 42% |
| gnome03 | 1298m | 16% | 12507Mi | 19% |
| gnome04 | 460m | 5% | 11687Mi | 18% |
| goblin04 | 660m | 5% | 61026Mi | 47% |
| goblin05 | 539m | 4% | 51855Mi | 40% |
| goblin06 | 904m | 7% | 62636Mi | 48% |
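Per-node numbers are easier to reason about when rolled up by node group. Here's a rough sketch that parses `kubectl top nodes` output and totals CPU and memory per group. It assumes the plain-text layout shown above (CPU in millicores with an `m` suffix, memory with an `Mi` suffix), and `summarise` is a hypothetical helper name. The sample uses a handful of rows from the listing above.

```python
import re
from collections import defaultdict

SAMPLE = """\
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
dwarf01 456m 5% 25932Mi 81%
elf03 5858m 36% 26152Mi 20%
elf04 4438m 27% 59242Mi 46%
giant03 2496m 20% 25542Mi 39%
"""


def summarise(output: str) -> dict:
    """Sum CPU (millicores) and memory (Mi) per node group, e.g. 'elf'."""
    totals = defaultdict(lambda: {"nodes": 0, "cpu_m": 0, "mem_mi": 0})
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines, the header row, and anything unexpected.
        if len(fields) != 5 or fields[0] == "NAME":
            continue
        name, cpu, _cpu_pct, mem, _mem_pct = fields
        group = re.sub(r"\d+$", "", name)  # 'elf03' -> 'elf'
        totals[group]["nodes"] += 1
        totals[group]["cpu_m"] += int(cpu.rstrip("m"))
        totals[group]["mem_mi"] += int(mem.replace("Mi", ""))
    return dict(totals)


for group, stats in summarise(SAMPLE).items():
    print(group, stats)
```

Feeding it the full listing would show at a glance how the load is split across node groups.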

For comparison, last month (Mar 2024):

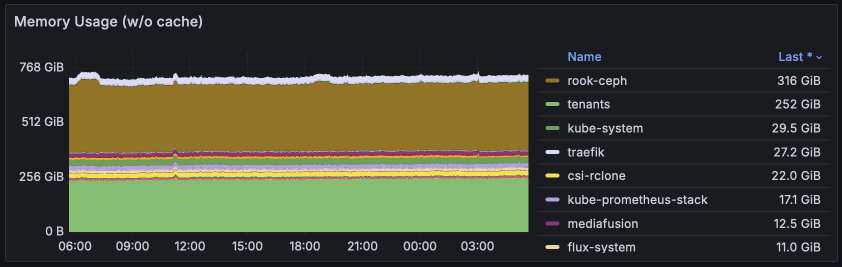

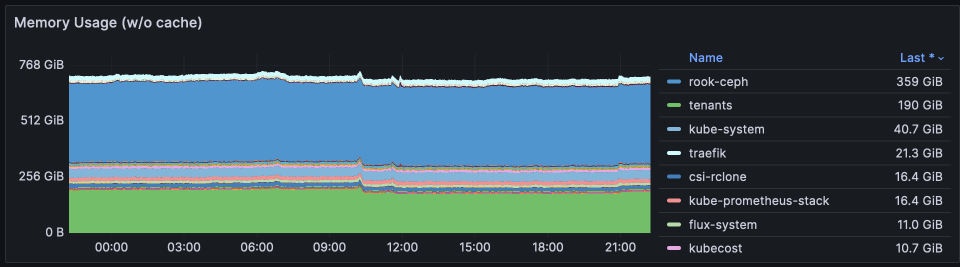

This graph represents memory usage across the entire cluster. By far the largest consumer of RAM is rook-ceph, since Ceph will basically take all the RAM you give it, for caching / performance.

Interestingly, RAM usage for tenants has increased by ~25%, while CPU usage has remained relatively constant. This may indicate that there are more tenant apps (we know there are) which are idling most of the time, consuming RAM but not active CPU resources.


For comparison, last month (Mar 2024):

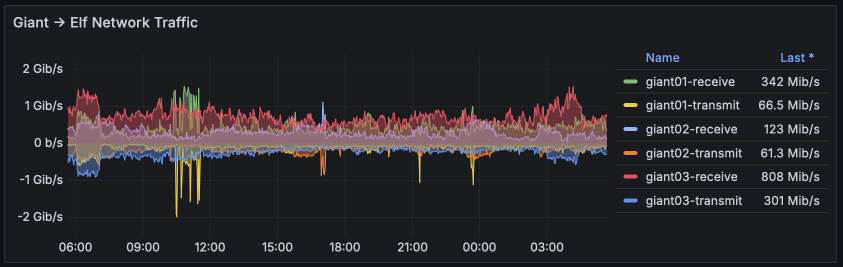

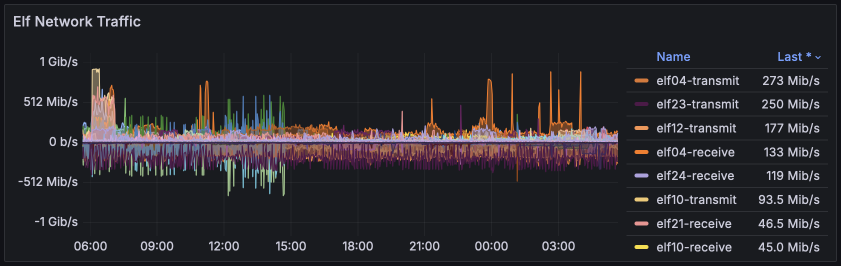

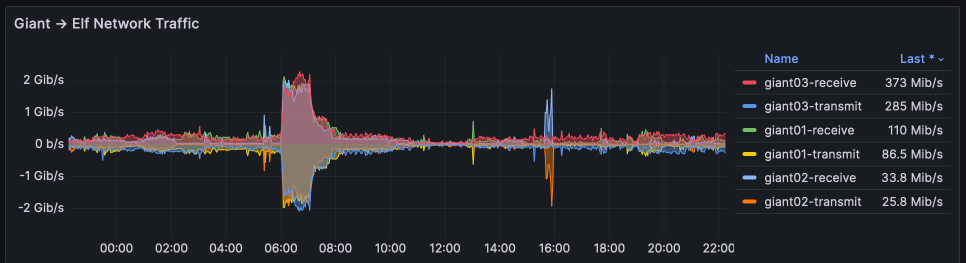

The two network graphs below represent the two points where throughput varies: the 10Gbps "giant" nodes (which are primarily used for ingress, as incoming Debrid content is passed to elves for streaming), and the 1Gbps "elf" nodes, which are our general workhorses.

Compared to March's graphs, April's graphs show significantly more continuous traffic on both the Giants (still ~10% of capacity) and the Elves (a more balanced spread). It's worth noting that during April, we also migrated some of the torrent clients onto the Giants, keeping a careful eye on total egress bandwidth, since we're billed for monthly bandwidth exceeding 20TB per 10Gbps node.
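To put the 20TB allowance in perspective, here's a back-of-the-envelope sketch. It assumes a 30-day month and decimal terabytes, and both function names are hypothetical. It says nothing about actual overage pricing, only how much data a sustained rate adds up to.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month, an approximation


def monthly_tb(sustained_gbps: float) -> float:
    """Data moved in a month at a sustained rate, in decimal terabytes."""
    bytes_per_second = sustained_gbps * 1e9 / 8
    return bytes_per_second * SECONDS_PER_MONTH / 1e12


def overage_tb(egress_tb: float, included_tb: float = 20.0) -> float:
    """Egress above the included allowance for one node, in terabytes."""
    return max(0.0, egress_tb - included_tb)


# 10% of a 10Gbps link is 1Gbps; sustained all month, that's a lot of data.
print(monthly_tb(1.0))
print(overage_tb(25.0))
```

A sustained 1Gbps works out to 324TB in a 30-day month, so a node would use up its 20TB of egress at an average of only about 62Mbit/s. That's why egress on the Giants needs watching even when the links look mostly idle (the ~10% figure covers mostly ingress, which isn't what the allowance applies to).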

For comparison, last month (Mar 2024):

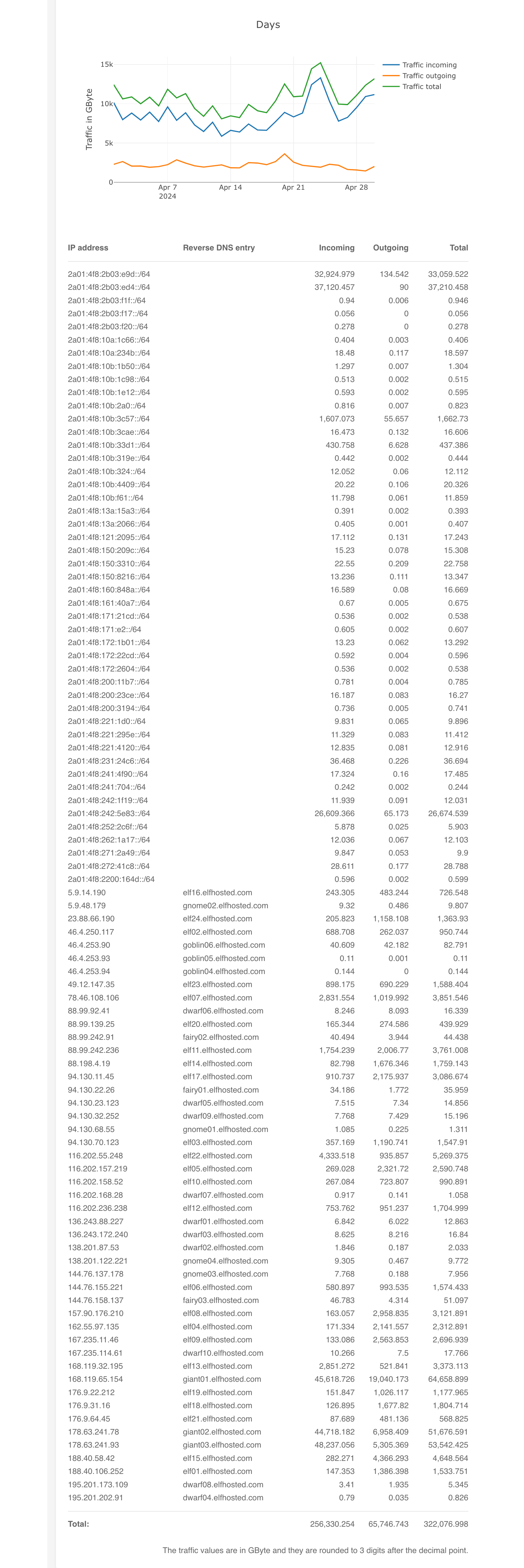

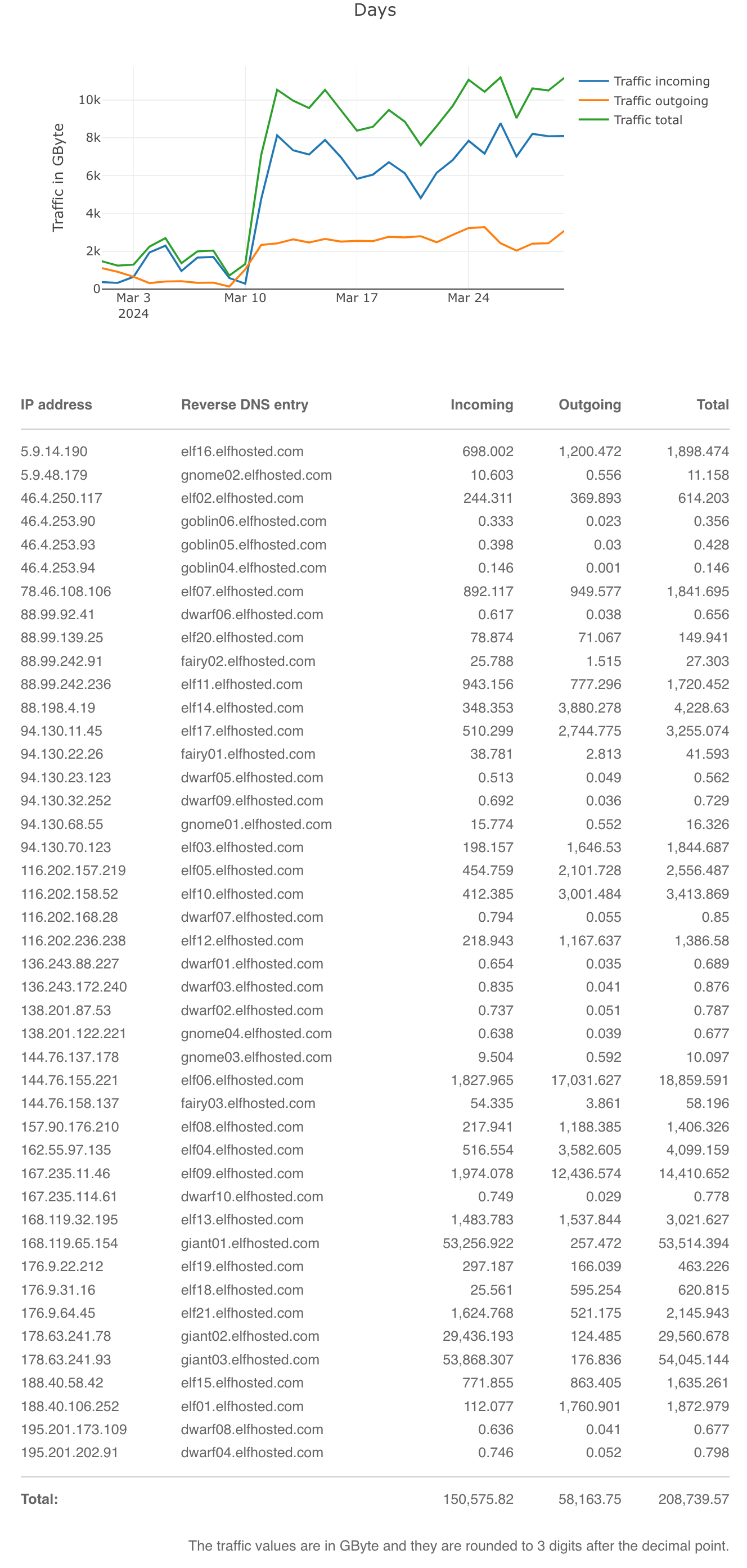

These are the traffic stats for egress from Hetzner. They exclude any traffic to/from Hetzner Storageboxes or ElfStorage, since this traffic is not classified as "external".

Ingress / Egress stats for Apr 2024

For comparison, last month (Mar 2024):

Ingress / Egress stats for Mar 2024

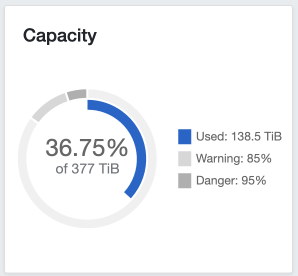

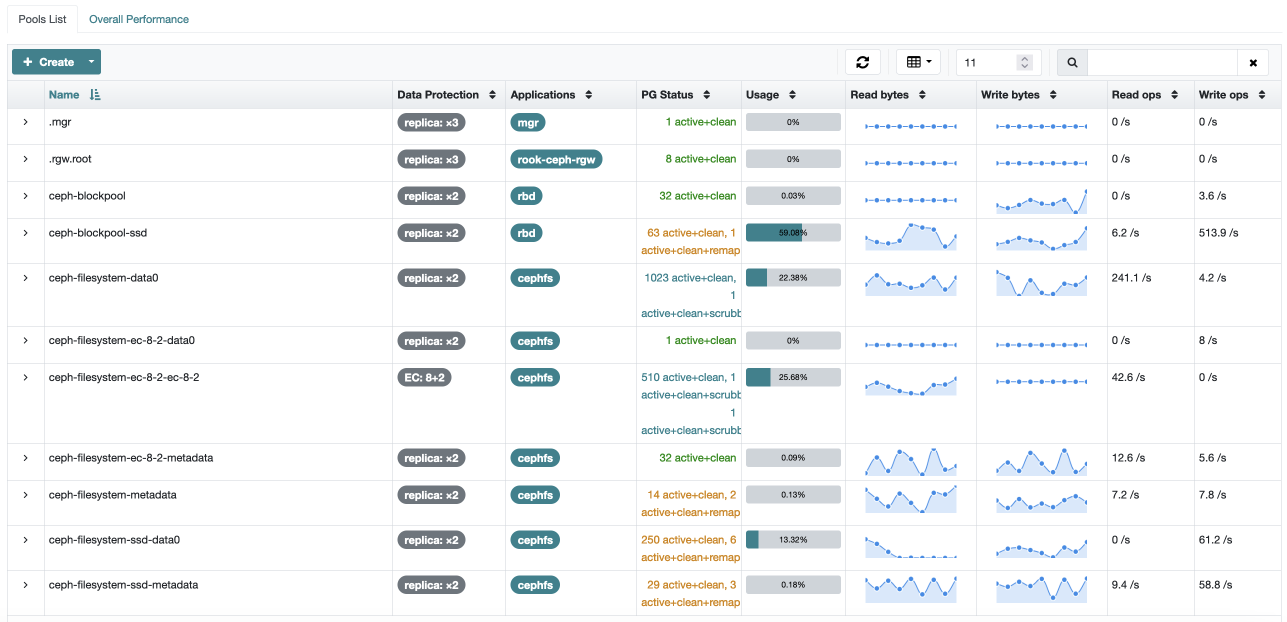

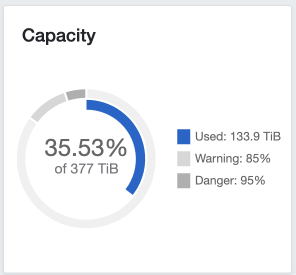

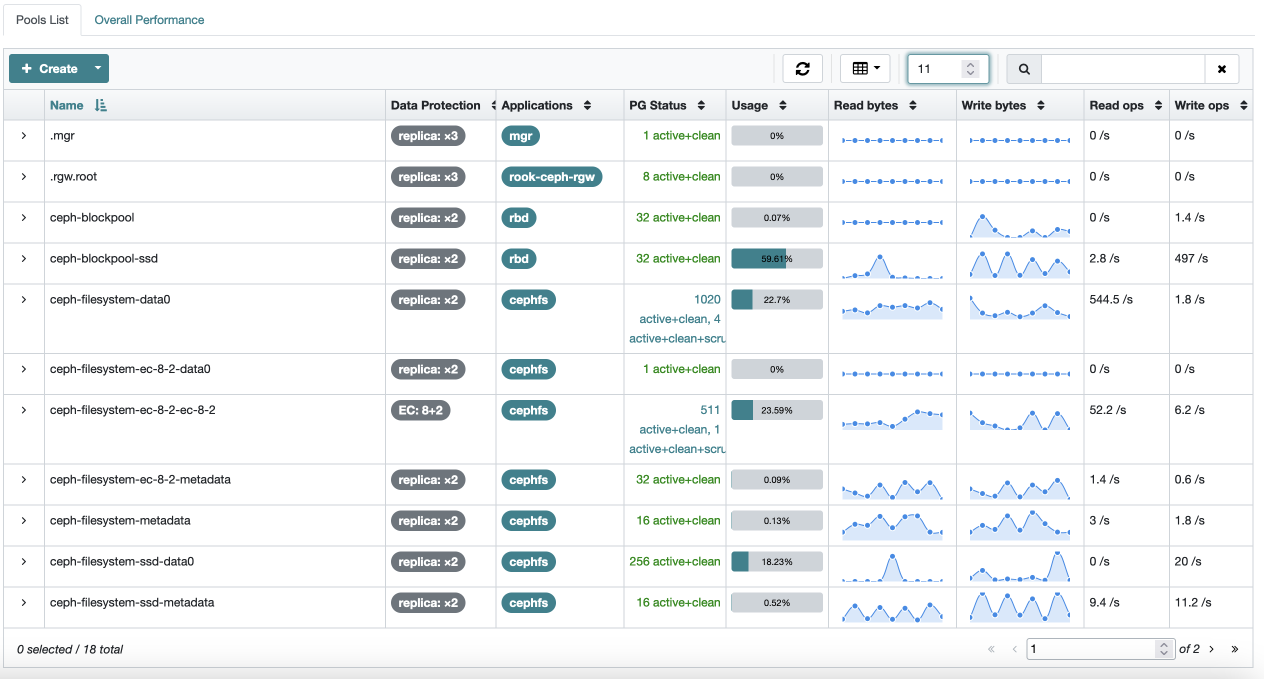

Ceph provides our own storage ("[ElfStorage][elfstorage]"), typically used for long-term slow storage and seeding, as well as all the storage for our per-app /config volumes, now backed by 10Gbps nodes with NVMe disks.

Ceph storage only grew ~5% 30% during April, but it should be noted that during the period we switched to ephemeral storage for the PhotoTranscoder directories, which significantly reduced Plex's storage consumption.

For comparison, last month (Mar 2024):

Retrospective

100% more ElfVengers!

While producing this report, we graduated 2 more Elves (@chris and @erythro) to "Elf-Vengers", the elite team of heroes who help new elves with their setups, and respond to #elf-help tickets when available / applicable.

What about the

?

Elf-Vengers who respond to user tickets have been vetted to be "not catphishers"!

If you'd like to join our hallowed ranks, start here!

Cluster updated with IPv6 "in-flight"

Our Kubernetes cluster was built on IPv4 addressing only (no IPv6). Adding IPv6 after cluster initialization (making it "dual-stack") is not supported in K3s (but it turns out it works anyway)...

During April 2024, RealDebrid surprise-changed their policies, restricting non-residential IPv4 addresses, which impacted our "Infinite Streaming" users, since we mount your RealDebrid libraries from our Hetzner datacenter IP ranges. At the time of the change, even switching to a VPN for the Zurg->RD connection didn't help, since VPNs were also blocked (this may have been reversed by now).

To restore access for our users, we enabled IPv6 addressing in our cluster (technically, because we use Cilium and not K3s-managed Flannel, this was possible, but.. hacky), forcing the Zurg->RD connection via IPv6. This resolved the problem (for the time being!), and services were restored. It did take a few more days for the after-effects of this change to be fully resolved though, and we experienced connectivity issues across our apps as we dealt with IP address allocation overlaps and other consequences of making such a wide-reaching change with relatively little planning/testing!
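Allocation overlaps are the kind of thing that's cheap to check before a change like this. Here's a minimal sketch using Python's standard `ipaddress` module. The CIDRs below are made up for illustration and are not our real allocations, and `find_overlaps` is a hypothetical helper.

```python
from ipaddress import ip_network
from itertools import combinations


def find_overlaps(cidrs: dict) -> list:
    """Return pairs of named ranges that overlap (same IP family only)."""
    nets = {name: ip_network(cidr) for name, cidr in cidrs.items()}
    clashes = []
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        # Only compare IPv4 with IPv4 and IPv6 with IPv6.
        if net_a.version == net_b.version and net_a.overlaps(net_b):
            clashes.append((a, b))
    return clashes


# Hypothetical ranges for illustration only.
ranges = {
    "pods-v4": "10.42.0.0/16",
    "services-v4": "10.43.0.0/16",
    "pods-v6": "fd00:42::/56",
    "services-v6": "fd00:42:0:80::/112",
}

print(find_overlaps(ranges))
```

In this example the two IPv4 ranges are fine, but the IPv6 service range sits inside the IPv6 pod range, so the pair is reported as a clash.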

We've noted no further issues for about a week since, and our cluster is now fully IPv6-enabled and ready for the "future"!

Plex Performance Tweaks

Some daily network usage analysis (thanks to the public ElfHosted cluster Grafana dashboard) revealed Plex to be doing multi-Gbit/s downloads from RealDebrid / ElfStorage on a daily basis, for the purposes of analysing media for chapter markers, credit/intro skipping, etc.

Since such activities are obviously not scalable when dealing with remote storage mounts, we've disabled these scheduled tasks, and carefully tuned the transcode killer to prevent unnecessary thrashing of CPU/GPU resources.

More details in this blog post

KnightCrawler parser updated

The first ElfHosted Stremio Addon was a hacky, untested build of iPromKnight's monster rewrite of the backend components of the original torrentio open-source code.

It served us well from Feb 2024, and was my introduction to the wider StremioAddons community, but the rapid pace of the KnightCrawler devs outpaced our build, and so while fresh builds were prancing around with fancy parsers and speedy Redis caches, we ended up with a monster MongoDB instance (shared by the consumers, and public/private addon instances), which would occasionally eat more than its allocated 4 vCPUs and get into a bad state!

To migrate our hosted instance to the v2 code, we ran a parallel build, imported the 2M torrents we'd scraped/ingested, and re-ran these through KnightCrawler's v2 parsing/ingestion process. Look at how happily our v2 instance is purring along now!

More details in this blog post

RDTClient (auto) updated to mainstream

For a few months, we'd been stuck on an older version of RDTClient, since our cluster-specific hardening (not running as root, for example) didn't work with the latest, "official" version.

With some careful testing by a handful of users, we were able to adjust our image to better align with the upstream code, and we now have an in-house image which is both compliant with our internal security standards, and is able to be auto-updated from upstream when new features are released.

More details in this blog post

Stremio Server added

With the repricing behind us, we had time to focus on a significant release - a service which runs an instance of Stremio Server, the transcoding / torrenting component of Stremio, usually bundled with the client app. With the addition of a user-supplied VPN config, this service provides a user with a separate streaming/transcoding endpoint for Stremio, such that they can point their Stremio clients (like under-powered smart TVs) at their own streaming server, and have the torrenting / transcoding process offloaded, allowing users to:

- Safely stream direct torrents without worrying about a VPN

- Simultaneously stream Debrid downloads from supported clients (currently just https://web.strem.io or our hosted Stremio Web instance)

More details in this blog post, plus a step-by-step guide to simultaneous Stremio streaming!

What's coming up?

Video Guides

I've toyed with the idea of producing video guides for some of the more complex integrations, and during May I hope to try my hand at producing some screencasts to make these more accessible. If you've got an interest in screencasting / video production, and you'd like to help a noob out, hit me up!

TRaSH is TReaSUre

Informally, in the #elf-friends channel, we've been discussing implementing TRaSH Guides custom formats for several weeks now, and many users are using these to download the optimal content for directplaying (always better than transcoding!). We've also recently published @LayZee's Radarr/Sonarr guides to manually adding TRaSH to your Aars.

There's a little more work to do around the ElfBot implementation (elfbot recyclarr sync works, but some of the other commands don't), and then we'll publish a blog post with more details!

AirDC++ service

We've had an AirDC++ service in beta for a while, and with the support of a few invested Elves, this is now ready for "gen-pop". With a dusting of documentation and process, look for the AirDC++ service to become available shortly!

PostgreSQL support for the Aars

Radarr, Sonarr, etc. all now have built-in support for PostgreSQL as a replacement for the troublesome and easy-to-corrupt SQLite database they come with by default. To support our KnightCrawler database, I've started using CloudNativePG (CNPG) for full-lifecycle database management. With a simple CR, CNPG will establish a highly-available PostgreSQL cluster, including automated failover, and automatic incremental backups to local snapshots and to an S3 bucket.

This declarative approach to creating a PostgreSQL database could allow us to create individual databases for Arr instances in bulk, such that setup is still fully automatic, and users just "get" the PostgreSQL-enabled instances. Once again deferred by more pressing issues in April, I hope to pay more attention to this in May!
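As a rough idea of what "in bulk" could mean, here's a sketch that generates one minimal CNPG `Cluster` manifest per Arr instance and prints it as JSON (which `kubectl apply -f` accepts as well as YAML). The tenant names, namespaces, instance count and storage size are all hypothetical, and backup configuration is left out entirely. It's an illustration, not our actual setup.

```python
import json


def cnpg_cluster(name: str, namespace: str, storage: str = "1Gi") -> dict:
    """Build a minimal CloudNativePG Cluster manifest as a plain dict."""
    return {
        "apiVersion": "postgresql.cnpg.io/v1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "instances": 3,  # assumed: one primary plus two replicas
            "storage": {"size": storage},
        },
    }


# Hypothetical tenant/app pairs; real ones would come from subscriptions.
instances = [("alice", "radarr"), ("alice", "sonarr"), ("bob", "radarr")]
manifests = [
    cnpg_cluster(f"{app}-db", f"tenant-{user}") for user, app in instances
]

print(json.dumps(manifests[0], indent=2))
```

Because each manifest is just data, the same loop could feed whatever templating already creates the Arr instances.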

Offering free demos

Nothing gets my attention on a new app like a live demo. I've considered reaching out to open source projects who don't have their own online demo, and offering to host a self-resetting demo for them.

This approach has been successful with the Stremio Addons, and I think it'd be effective at gaining exposure to more niche communities.

A hosted demo would provide their potential users the opportunity to evaluate the app "live", and would also drive more traffic / recognition / SEO juice towards ElfHosted.

If you've got an open-source, self-hosted app and you'd like a free demo instance hosted, hit me up!

(We are currently donating torrent hosting to https://freerainbowtables.com)

Your ideas?

Got ideas for improvements? I'd love to hear them, post them in #elf-suggestions!

How to help

If you'd like to make a donation in recognition of our infrastructure costs, our open-source resources, or our friendly support, a simple donation product is available at https://store.elfhosted.com/product/elf-love/

Another effective way to help is to drive traffic / organic discovery, by mentioning ElfHosted in appropriate forums such as Reddit's r/selfhosted, r/seedboxes, r/realdebrid, and r/StremioAddons, which is where much of our target audience is to be found!

Join us!

Want to get involved? Join us in Discord and come and test-in-production!

1. All moneyz are in US dollarz.
2. For simplicity's sake, we're only presenting stats for https://elfhosted.com here, and not https://store.elfhosted.com. Fully transparent traffic details are available for elfhosted.com and store.elfhosted.com.
3. Includes "opportunity cost" of deferring billable consulting work for ElfHosted development!
4. Yes! We finally broke even on our cash expenses. This was the first month we offered prepaid pricing though, and several users took advantage of yearly commitments, so next month may not be as profitable. We remain cautiously optimistic...
5. These are actually paid yearly in advance, but represented here monthly for consistency. Confirm my sponsorships here.
6. Total pod count dropped because we (a) improved pruning of un-subscribed services, and (b) removed rclonefm/ui by default, but made them free to add, in the store.