WARPing around RealDebrid blocks with VPNs

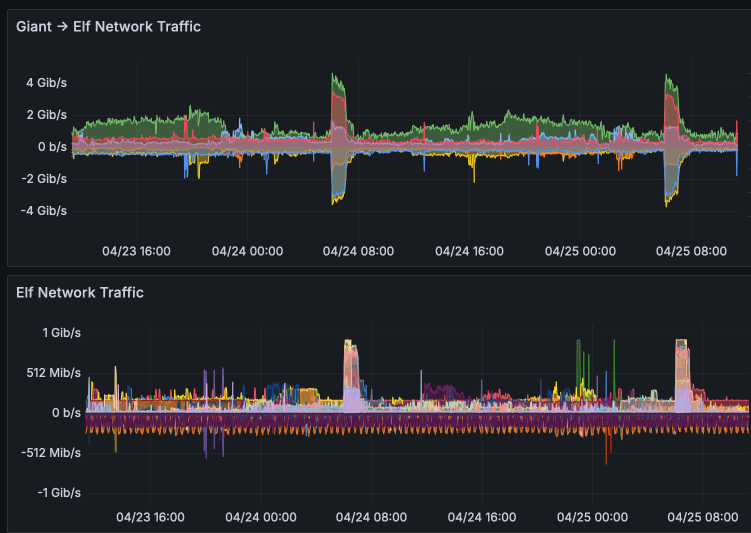

About 24 hours ago (just as we were running a big Riven rollout), RealDebrid finally closed the IPv6 loophole that had been allowing us to use Zurg with RealDebrid from our datacenter IPv6 range.

We've added a new product/process that lets us connect your pods (Zurg, in this case) to your own existing VPN. On the plus side, it's extremely flexible, since gluetun supports a huge range of VPN providers. On the downside, it requires some geeky interaction from the user, which I explain in this video:

The solution works great, and several of our geekier elves validated it with Mullvad, Nord, ProtonVPN, and PIA. However, they were only a small subset of the affected users, and the prospect of VPN-ifying 100+ Zurg pods was looming unpleasantly...

And then our newest Elf-Venger, @Mxrcy, pointed me to a container image for Cloudflare WARP, which can be used as a SOCKS5 proxy in Zurg 0.10 with zero manual config! After a bit more refactoring, we were able to reunite all users with their beloved RD libraries via Cloudflare WARP!

However...